
The Problem

The Maritime Operational Evaluation Team (MOET) currently conducts all of its evaluations manually on paper-based forms; after each evaluation, the data is entered into a computer to produce reports. This system has several problems:

Propagation of the MOET Schedule:

At the beginning of each evaluation day, every Subject Matter Expert (SME) is given a schedule of the day's events. However, the current central plan provides no quick way for each SME to receive a copy of the updated schedule.

Handwriting Issue:

SMEs' handwriting varies in legibility, so when the data is later entered into the computer, the person entering it may be unable to read what was written. This can lead to evaluations being misinterpreted.

Repetition of Input Data:

Data is effectively entered twice: once when it is recorded on paper, and again when it is transferred onto the computer. This duplication is unnecessary and time-consuming.

Quality Checking:

Editing and managing what has been written is cumbersome and must be done manually.

No Statistical Analysis of Data Captured:

There is no efficient way of analysing the captured information at a quantitative level.

Quantity of Paper:

The paper-based forms use a vast amount of paper, which wastes resources and creates a large storage burden.

The main objective of the new MOET system is to provide a modular digital solution that is easy and efficient to use.

Key objectives identified:

Accurate Analysis of the Current MOET Evaluation

It is essential that we understand how the MOET is currently run and co-ordinated. Once we have a clear idea of what must change to improve the MOET as a whole, we can move on to the requirements that will shape a better solution. Requirements gathering is the key process that forms the foundation on which the new system will be built.

Stable and Reliable System

Writing on paper does not simply disappear, and the new system must be no different. It is imperative that the Navy has a robust, stable and reliable system, so that SMEs can be confident their data is secure once they enter it. The MOET is a critical Navy process, and we will endeavour to deliver a system that is free of errors and bugs.

Visually Acceptable

The PDA's screen is small, so the interface must be easy to interact with and to enter information on. To encourage adoption of the new devices, it should also be aesthetically pleasing to the user.

User-Friendliness

The forms will have an uncluttered layout that does not confuse the user. We plan to develop a logical navigation screen that is as simple as possible, allowing the user to move easily from one element to the next.

Well Documented

The system will be used by both current and prospective personnel, and good documentation will shorten training time. The software itself should also be well documented to allow ongoing enhancement after the project is finished.

Desktop/Laptop Solution

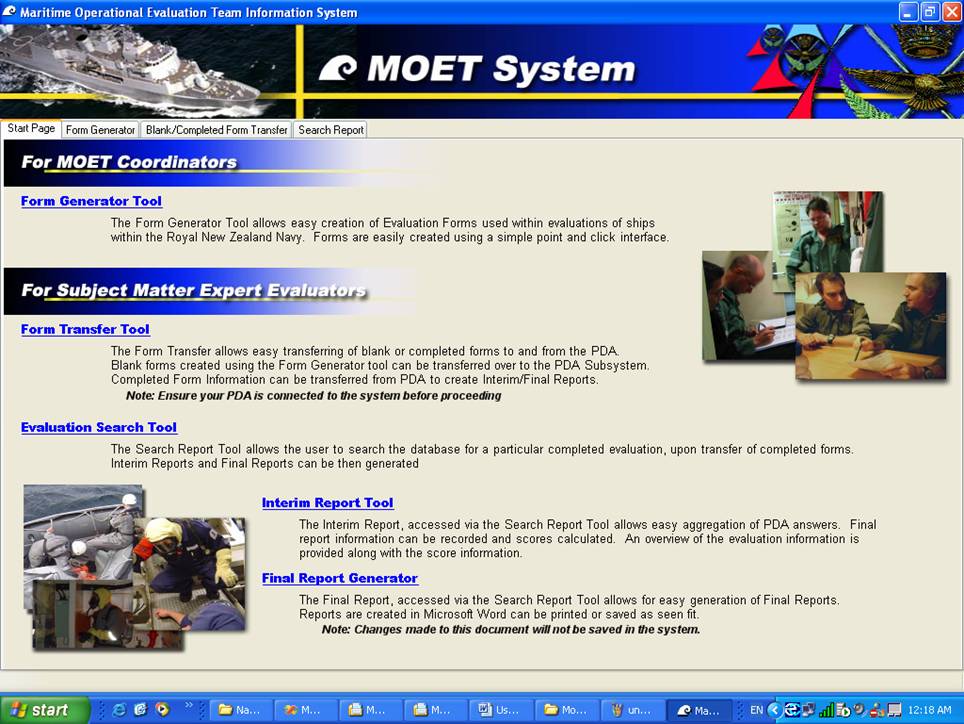

Start Page

The Start Page is the first screen the user sees and interacts with. It provides a graphical selection of the Tabs available within the MOET system; clicking a link opens the Tab named on that link. The page is divided into two parts:

For Coordinators

Contains a link to the Form Generator Tab.

For Subject Matter Expert Evaluators

Contains links to the Form Transfer Tool, Evaluation Search Tool, Interim Report Tool and Final Report Generator.

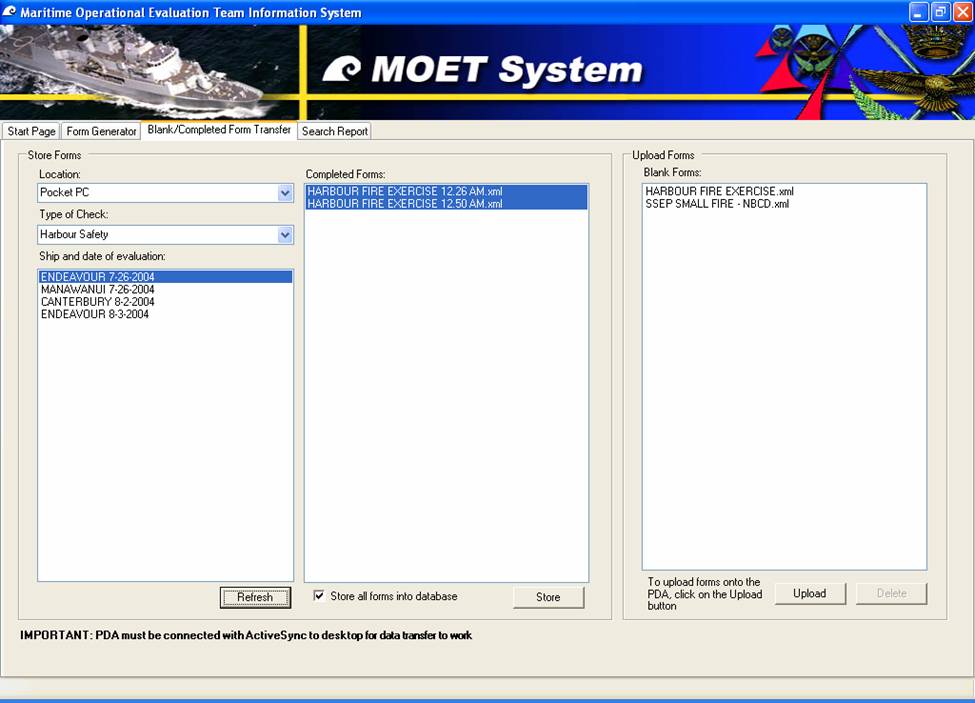

Form Transfer

Blank/Completed Form Transfer

This Tab allows the user to transfer form data between the PDA and the desktop.

It retrieves a list of the completed forms stored on the connected PDA, and the user can select which completed evaluation forms to transfer to the database. It also allows the user to upload blank forms from the desktop to the PDA. For any form transfer to work, the PDA must be connected to the desktop.

Note: If a completed evaluation form has already been stored in the database and the user selects it for storage again, it will not be stored a second time; this prevents duplicate data.
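The duplicate check described in the note can be illustrated with a minimal Python sketch. It assumes each completed form carries a unique identifier (the hypothetical `form_id` field) and uses an in-memory dictionary in place of the real database; the identifier and the class names are illustrative, not part of the MOET design.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CompletedForm:
    # Hypothetical fields: the real MOET form schema is not specified here.
    form_id: str        # assumed unique identifier assigned on the PDA
    evaluator: str
    answers: tuple      # e.g. (("Q1", "Yes"), ("Q2", "N/A"))


@dataclass
class EvaluationDatabase:
    # In-memory stand-in for the desktop database, keyed by form_id.
    forms: dict = field(default_factory=dict)

    def store_selected(self, selected):
        """Store each selected form unless its form_id is already present.

        Returns (stored_ids, skipped_ids) so the UI can report skipped duplicates.
        """
        stored, skipped = [], []
        for form in selected:
            if form.form_id in self.forms:
                skipped.append(form.form_id)  # already stored: skip to avoid duplication
            else:
                self.forms[form.form_id] = form
                stored.append(form.form_id)
        return stored, skipped


if __name__ == "__main__":
    db = EvaluationDatabase()
    form = CompletedForm("PDA1-0001", "SME A", (("Q1", "Yes"),))
    print(db.store_selected([form]))  # (['PDA1-0001'], [])
    print(db.store_selected([form]))  # ([], ['PDA1-0001'])
```

Returning the skipped identifiers, rather than silently ignoring them, would let the Form Transfer Tab tell the user which selected forms were already in the database.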

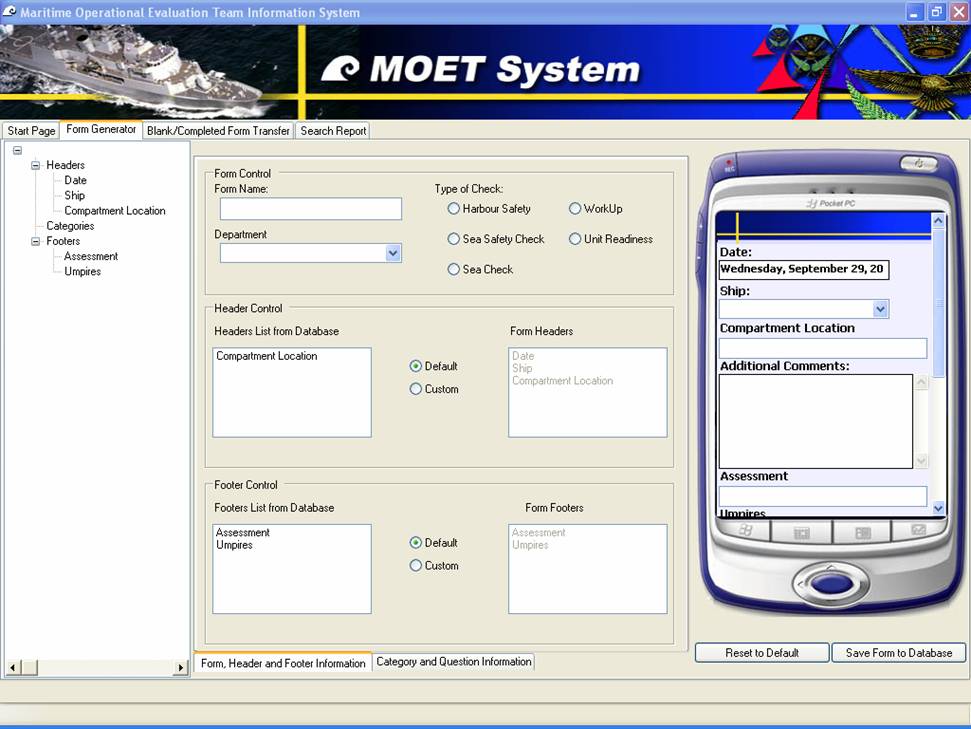

Form Generator

The Form Generator Tab provides the user with an intuitive interface for creating forms, either from data already stored in the database or as entirely new custom forms. It has a node view, which shows the layout of the form, and a PDA view, which shows how the final form will look on the PDA. Both views update automatically whenever the layout changes.

The Form Generator will be used to add any new forms for which the Navy would like to carry out assessments. Rather than typing out every question and category, the user can reuse questions or categories already stored in the database.

Mouse-friendly utilities have been added to allow quick form design, including drag-and-drop functionality to easily reorder categories and questions on the new form, and IntelliSense-style autocompletion when typing questions. Tooltips have been added to show the full text of a label whenever that text is wider than its control.
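The autocompletion of questions could work by prefix matching against the questions already stored in the database. The following is a minimal Python sketch under that assumption; case-insensitive prefix matching, the `QuestionAutocomplete` class and the sample questions are illustrative choices, not the Form Generator's documented behaviour.

```python
from bisect import bisect_left


class QuestionAutocomplete:
    """Prefix-based suggestions over questions already stored in the database.

    Case-insensitive prefix matching is an assumption made for this sketch.
    """

    def __init__(self, stored_questions):
        # Sort (lower-cased key, original text) pairs so that every question
        # sharing a prefix sits in one contiguous run of the list.
        self._entries = sorted((q.lower(), q) for q in set(stored_questions))
        self._keys = [key for key, _ in self._entries]

    def suggest(self, typed, limit=5):
        """Return up to `limit` stored questions beginning with `typed`."""
        prefix = typed.lower()
        start = bisect_left(self._keys, prefix)  # index of first key >= prefix
        results = []
        for key, original in self._entries[start:]:
            if len(results) >= limit or not key.startswith(prefix):
                break
            results.append(original)
        return results


if __name__ == "__main__":
    stored = [
        "Was the fire alarm raised?",
        "Was the hatch secured?",
        "Were lookouts posted?",
    ]
    completer = QuestionAutocomplete(stored)
    print(completer.suggest("was the"))
```

Keeping the keys sorted means each keystroke only needs a binary search plus a short scan, rather than a pass over every stored question.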

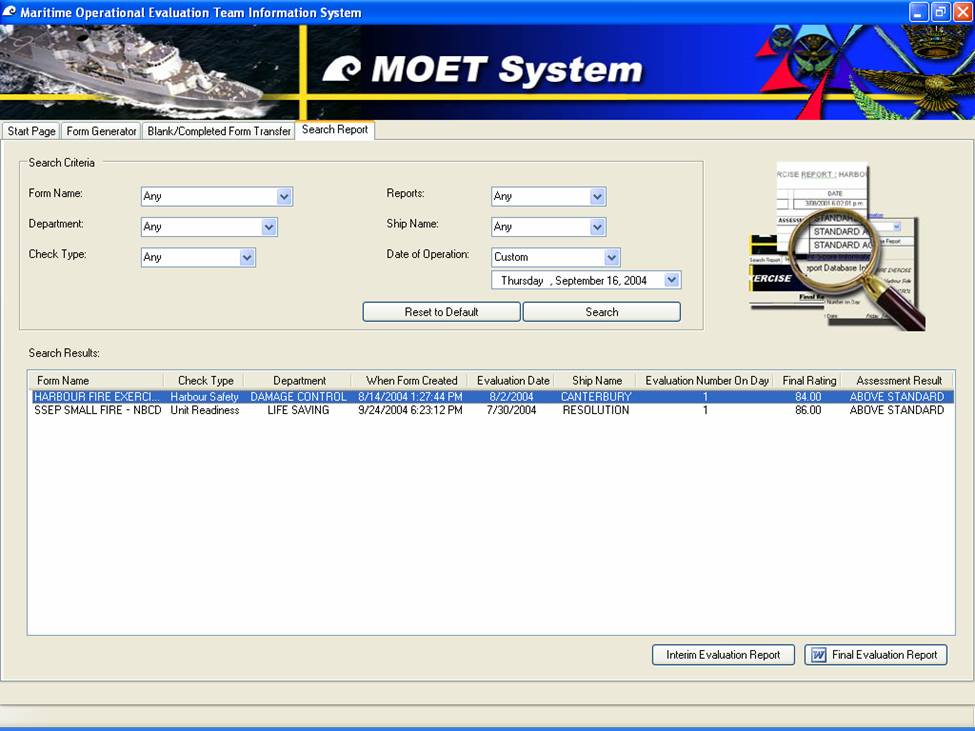

Search Report

The Search Report Tab allows the user to search the database for a particular evaluation and either generate its Interim Report or, if its Final Report has already been stored, generate a Word document of that report. Using the Search Criteria Panel, the user can specify criteria including Form Name, Department, Check Type, Reports, Ship Name and Date of Operation; the results are displayed in a list view.
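The search can be thought of as filtering stored evaluations on whichever criteria the user filled in. This minimal Python sketch assumes exact matching on each field and treats an empty criterion as "match anything"; the record fields mirror the panel's criteria, but the `has_final_report` field is an assumed reading of the "Reports" criterion, and the sample records are hypothetical.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class EvaluationRecord:
    # Hypothetical record shape; field names mirror the Search Criteria Panel.
    form_name: str
    department: str
    check_type: str
    ship_name: str
    date_of_operation: date
    has_final_report: bool  # assumed meaning of the "Reports" criterion


@dataclass
class SearchCriteria:
    # None means "not specified", so that field matches every record.
    form_name: Optional[str] = None
    department: Optional[str] = None
    check_type: Optional[str] = None
    ship_name: Optional[str] = None
    date_of_operation: Optional[date] = None
    has_final_report: Optional[bool] = None


def search(records, criteria):
    """Return the records that match every criterion the user specified."""
    active = {
        f.name: getattr(criteria, f.name)
        for f in fields(criteria)
        if getattr(criteria, f.name) is not None
    }
    return [
        record
        for record in records
        if all(getattr(record, name) == value for name, value in active.items())
    ]


if __name__ == "__main__":
    records = [
        EvaluationRecord("Damage Control", "Engineering", "Safety", "Ship A", date(2005, 3, 1), True),
        EvaluationRecord("Navigation", "Operations", "Readiness", "Ship B", date(2005, 3, 2), False),
    ]
    for match in search(records, SearchCriteria(ship_name="Ship A")):
        print(match.form_name)
```

Testing `is not None` rather than truthiness keeps a deliberate `False` (for example, "no Final Report yet") as an active criterion.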

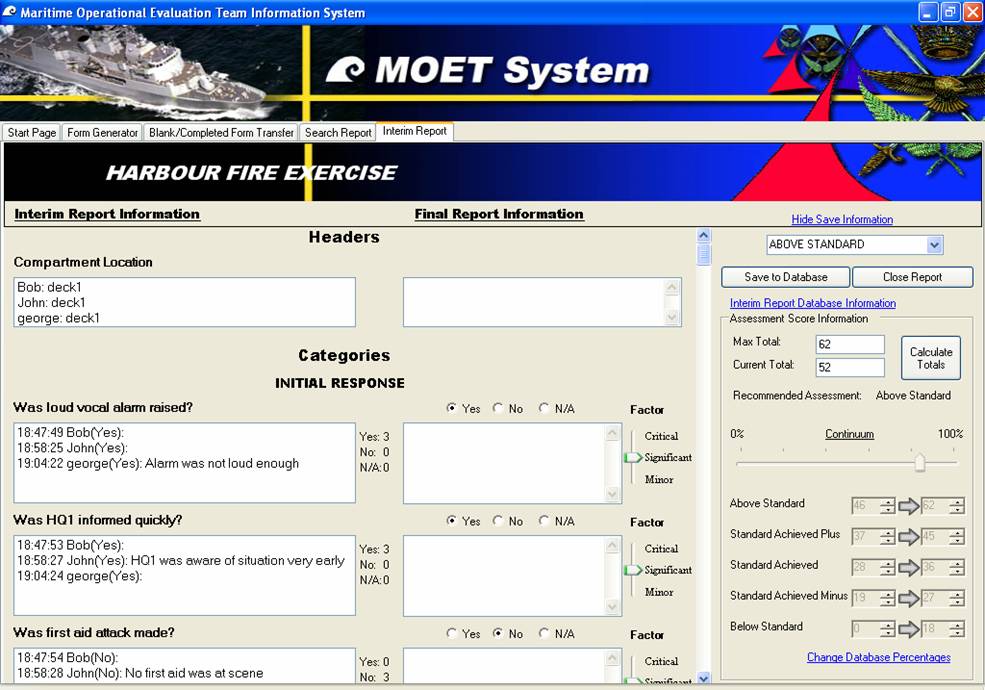

Interim Report

The Interim Report Tab shows the information collected from the evaluators' PDAs on a single page. For each question, it displays all of the evaluators' answers and notes, with totals for the number of Yes, No and N/A answers shown beside the question.
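The per-question totals amount to a simple count. This minimal Python sketch assumes the answers for one question arrive as a mapping from evaluator name to answer string; that input shape, and rejecting any answer other than Yes, No or N/A, are assumptions for illustration.

```python
from collections import Counter

ANSWER_CHOICES = ("Yes", "No", "N/A")


def tally_answers(answers_by_evaluator):
    """Count the Yes, No and N/A answers given to one question.

    answers_by_evaluator: mapping of evaluator name -> answer string
    (a hypothetical shape). Every choice appears in the result, even at zero.
    """
    counts = Counter(answers_by_evaluator.values())
    unexpected = set(counts) - set(ANSWER_CHOICES)
    if unexpected:
        raise ValueError(f"unexpected answers: {sorted(unexpected)}")
    return {choice: counts[choice] for choice in ANSWER_CHOICES}


if __name__ == "__main__":
    print(tally_answers({"SME A": "Yes", "SME B": "No", "SME C": "Yes"}))
    # {'Yes': 2, 'No': 1, 'N/A': 0}
```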

Each question also has associated Final Report information. The debriefing team goes through each question and selects a final answer, which defaults to the answer with the highest total. The team can also enter final notes in the Note Boxes, and can copy a particular evaluator's note into them simply by double-clicking that note.
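Defaulting the final answer to the highest total amounts to taking the maximum over the Yes, No and N/A counts. The document does not say how ties are resolved, so this minimal Python sketch assumes ties go to whichever answer appears first in a hypothetical `tie_order` parameter; the debriefing team can still override the default.

```python
ANSWER_ORDER = ("Yes", "No", "N/A")


def default_final_answer(totals, tie_order=ANSWER_ORDER):
    """Return the answer with the highest total as the default final answer.

    totals: mapping such as {"Yes": 3, "No": 1, "N/A": 0}; missing keys count as 0.
    Ties go to the answer listed first in tie_order. This tie-break rule is an
    assumption: the document does not say how ties are resolved.
    """
    return max(
        tie_order,
        key=lambda choice: (totals.get(choice, 0), -tie_order.index(choice)),
    )


if __name__ == "__main__":
    print(default_final_answer({"Yes": 1, "No": 3, "N/A": 0}))  # No
    print(default_final_answer({"Yes": 2, "No": 2, "N/A": 0}))  # Yes (tie)
```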

Each question is assigned a weighting of importance in the database. When the Interim Report is generated, a total score is produced by adding together every question's weighting multiplied by its critical factor; questions answered N/A are excluded from this total. Each question answered ‘Yes’ adds its weighting multiplied by its critical factor to the current score.
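Put another way, the total score is the sum of weighting × critical factor over all questions not answered N/A, and the current score is the same sum restricted to questions answered Yes. This minimal Python sketch assumes the weighting and critical factor are plain numbers and that `answer` holds the answer being scored (presumably the final answer, though the document does not say which answer is used); the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredQuestion:
    weighting: float        # importance weighting stored in the database
    critical_factor: float  # the question's critical factor (assumed to be numeric)
    answer: str             # "Yes", "No" or "N/A"; assumed to be the answer being scored


def calculate_scores(questions):
    """Return (current_score, total_score) following the Interim Report rule."""
    total_score = 0.0
    current_score = 0.0
    for question in questions:
        if question.answer == "N/A":
            continue  # N/A questions do not count towards the total score
        points = question.weighting * question.critical_factor
        total_score += points
        if question.answer == "Yes":
            current_score += points  # only Yes answers add to the current score
    return current_score, total_score


if __name__ == "__main__":
    questions = [
        ScoredQuestion(2, 1.5, "Yes"),  # 3 points, earned
        ScoredQuestion(1, 2, "No"),     # 2 points, not earned
        ScoredQuestion(4, 1, "N/A"),    # excluded entirely
        ScoredQuestion(1, 1, "Yes"),    # 1 point, earned
    ]
    print(calculate_scores(questions))  # (4.0, 6.0)
```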

From these scores, a continuum out of 100% is produced. Each assessment grade has a percentage floor: the minimum mark required to achieve that grade. These assessment percentages are stored in the database and can be changed at any time by pressing the “Change Database Percentages Tab”.
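A minimal Python sketch of the continuum and grade lookup follows. It assumes the continuum is the current score expressed as a percentage of the total score, and that the recommended grade is the grade with the highest floor the percentage reaches. The grade names and floors in `EXAMPLE_FLOORS` are hypothetical placeholders for the values stored in the database.

```python
def continuum_percentage(current_score, total_score):
    """Express the current score as a percentage of the total score (assumed definition)."""
    if total_score <= 0:
        # e.g. every question answered N/A, leaving nothing to score against
        raise ValueError("total score must be positive")
    return 100.0 * current_score / total_score


def recommended_grade(percentage, grade_floors):
    """Return the grade with the highest floor that the percentage reaches.

    grade_floors: mapping of grade name -> minimum percentage, standing in for the
    assessment percentages stored in the database. Returns None if no floor is met.
    """
    best = None
    for grade, floor in grade_floors.items():
        if percentage >= floor and (best is None or floor > grade_floors[best]):
            best = grade
    return best


# Hypothetical grade names and floors, for illustration only.
EXAMPLE_FLOORS = {
    "Excellent": 90,
    "Satisfactory": 70,
    "Needs Improvement": 50,
    "Unsatisfactory": 0,
}

if __name__ == "__main__":
    pct = continuum_percentage(4, 6)
    print(f"{pct:.1f}% -> {recommended_grade(pct, EXAMPLE_FLOORS)}")
    # 66.7% -> Needs Improvement
```

Because the floors are read from a mapping rather than hard-coded, editing the stored percentages changes the recommendation without any code change.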

When the user presses the Calculate Totals button, a recommended grade is displayed on the label. This can be used as a guide when deciding the final grade given to the assessment.

The Final Report information, along with the final grade given, is stored in the database when the user presses the ‘Save to Database’ button.

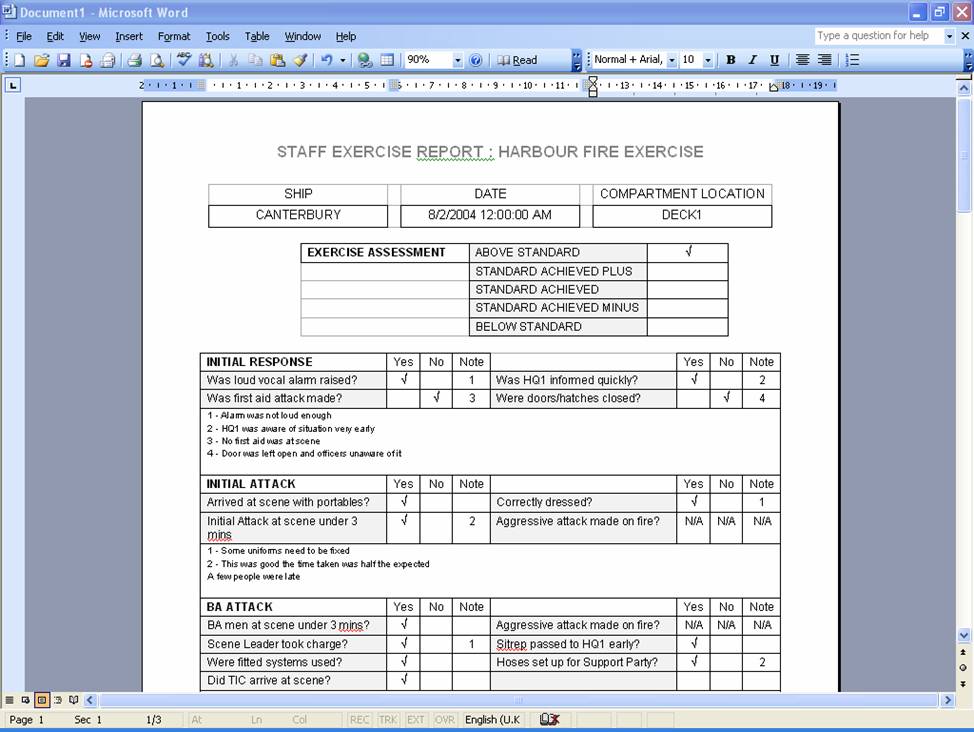

Final Word Document

The Final Report is opened in Microsoft Word, with the information automatically entered into the appropriate template. The user should use this only to print the report: any information edited here is not stored in the database, so such edits would prevent accurate trend analysis from being carried out.
