The epipolar geometry of a stereo system allows us to search for corresponding points

only along corresponding image lines:

The two above pinhole cameras have the projection centres Ol and

Or, focal lengths fl and

fr,

and image planes πl and πr, respectively.

Each camera identifies a 3D reference frame, the origin of which coincides with the

projection centre, and the Z-axis with the optical axis. The vectors

Pl =

[Xl,Yl,Zl]T

and

Pr =

[Xr,Yr,Zr]T

represent the same 3D point, P, in the left and right camera reference frames,

respectively. The vectors

pl =

[xl,yl,zl]T

and

pr =

[xr,yr,zr]T

express the projections of P onto the left and right image plane, respectively,

in the corresponding reference frame. For all the image points,

zl = fl or zr = fr,

according to the image.

The reference frames of the left and right cameras

are related via the extrinsic

parameters defining a rigid transformation in 3D space defined by a translation vector

T = (Ol − Or) and a

rotational matrix, R. Given a point P in 3D space, the

relation between Pl and Pr.

is as follows:

Pr = R(Pl − T).

The name epipolar geometry appears because

the points el and er

at which the line through the centres of projection intersects the

corresponding image planes are called epipoles. Thus, the left epipole depicts the

projection centre of the right camera and vice versa. If the line through the

centres of projection is parallel to one of the image planes, the corresponding

epipole is the point at infinity of that line.

The relation between a point P in 3D space and its projections

pl and pl onto the 2D image

planes is described by the vector-form equations of perspective projection:

pl = flPl/Zl

and

pr = frPr/Zr.

The plane through the points P, Ol, and Or is

called epipolar plane. It intersects each image in a line, called epipolar line.

Both the lines are called conjugated epipolar lines.

Given a point pl on the left image, the 3D point P can

lie anywhere on the ray from Ol through pl.

Since this ray is depicted in the right image by the epipolar line through the

corresponding point, pr, the true match must lie on the

epipolar line. The mapping between points in the left

image and lines in the right image and vice versa is called the epipolar constraint.

Since all rays include the projection centre, all the epipolar lines of one camera

go through the camera's epipole. With the exception of the epipole, only one epipolar

lines goes through any image point.

The epipolar constraint

restricts the search for the match of a point in one image along the corresponding epipolar

line in another image.

Thus the search for correspondences is reduced to a 1D problem. Alternatively, false matches

due to occlusions can be rejected by verifying

whether or not a candidate match lies on the corresponding epipolar line.

Return to the local table of contents

Return to the global table of contents

To estimate the epipolar geometry, i.e. determine the mapping between points in one

image and epipolar lines in the other image, the equation of the epipolar plane through

a 3D point P is written as the coplanarity condition of the vectors

Pl, T, and Pl−T,

or (Pl−T)T

T×Pl = 0. Here, × denotes the vector

(or outer, or cross) product of two vectors (see its

definition in

Wikipedia).

But

Pl − T =

RTPr

due to the aforementioned relationship

Pr = R(Pl − T)

and the fact that the rotation matrix is orthonormal and thus its inversion

is equivalent to transposition. Thus

(RTPr)

TT×Pl = 0,

or

PrTR

T×Pl = 0.

The vector product T×Pl can be

written as a multiplication by a rank-deficient matrix:

T×Pl = SPl where

.

Therefore,

PrTR

T×Pl =

PrTE

Pl = 0 where the matrix E=RS is called the

essential matrix. By construction, S has always rank 2 so that

the essential matrix also has rank 2.

.

Therefore,

PrTR

T×Pl =

PrTE

Pl = 0 where the matrix E=RS is called the

essential matrix. By construction, S has always rank 2 so that

the essential matrix also has rank 2.

The essential matrix establishes a natural link between the epipolar

constraint and the extrinsic parameters of the stereo system. The equality

PrTE

Pl = 0 is easily rewritten for the image points:

prTE

pl = 0 to indicate that the essential matrix is the

mapping between points and epipolar lines, i.e. the vector

ar = Epl

specifies parameters of the epipolar line

prTar=0

in the right image corresponding to the point pl in the

left image, as well as the vector

alT =

prTE

specifies parameters of the epipolar line

alTpl=0

in the left image corresponding to the point pr in the

right image.

The mapping between points and epipolar lines can be obtained from

corresponding points only, with no prior information on the stereo system.

Let Ml and Mr be the matrices of the

intrinsic parameters of the left and right camera, respectively, that

produce points in pixel coordinates corresponding to points in camera

coordinates:

where

where

are points in homogeneous pixel coordinates.

Therefore,

are points in homogeneous pixel coordinates.

Therefore,

, and the above equation

, and the above equation

with the essential matrix E

yields

with the essential matrix E

yields  .

The matrix F is called fundamental matrix. Just as the

essential matrix, the fundamental matrix has rank 2 (since E has rank 2 and

Ml and Mr have full rank). The essential matrix

encodes only information on the extrinsic parameters of the stereo system, whereas

the fundamental matrix encodes information on both the extrinsic and intrinsic

parameters. Both matrices enable full reconstruction of the epipolar geometry.

.

The matrix F is called fundamental matrix. Just as the

essential matrix, the fundamental matrix has rank 2 (since E has rank 2 and

Ml and Mr have full rank). The essential matrix

encodes only information on the extrinsic parameters of the stereo system, whereas

the fundamental matrix encodes information on both the extrinsic and intrinsic

parameters. Both matrices enable full reconstruction of the epipolar geometry.

The most important difference between these matrices is that the fundamental matrix

is defined for pixel coordinates and the essential matrix is defined for points in

3D camera coordinates. If the fundamental matrix is estimated from a number of

point matches in pixel coordinates, the epipolar geometry can be reconstructed

without information on the intrinsic or extrinsic parameters. Therefore, the

epipolar constraint, as the mapping between points and corresponding epipolar lines,

can be established with no prior knowledge of the stereo parameters.

Return to the local table of contents

Return to the global table of contents

The simplest technique for computing the essential and fundamental matrices

assumes that n; n ≥ 8, point correspondences between the

images are known. Each correspondence produces a homogeneous linear equation

like  for the nine unknown entries

of F. These equations form a homogeneous linear system, and if the

n points do not form degenerate configurations, the nine entries of

F are determined as the nontrivial solution of the system. Since

the system is homogeneous, the solution is unique up to a signed scaling

factor. Typically, n > 8, so that the system is overdetermined and

its solution is obtained using singular value decomposition (SVD) related

techniques

(see Appendix 2). If A is the system's

matrix and A = UDVT,

the solution is the column of V corresponding to the only null singular value

of A. Because of noise, numerical errors and inaccurate correspondences,

A is more likely to be full rank, and the solution is the column of V

associated with the least singular value of A. Because the

estimated fundamental matrix is almost certainly nonsingular, the singularity

constraint can be enforced by adjusting the entries of the estimated matrix

Fest as follows: compute the SVD of the estimated matrix,

Fest = UDVT;

set the smallest singular value on the diagonal of the matrix D equal to

zero; and compute the corrected estimate

F′ = UD′VT,

where D′ is the corrected matrix D.

for the nine unknown entries

of F. These equations form a homogeneous linear system, and if the

n points do not form degenerate configurations, the nine entries of

F are determined as the nontrivial solution of the system. Since

the system is homogeneous, the solution is unique up to a signed scaling

factor. Typically, n > 8, so that the system is overdetermined and

its solution is obtained using singular value decomposition (SVD) related

techniques

(see Appendix 2). If A is the system's

matrix and A = UDVT,

the solution is the column of V corresponding to the only null singular value

of A. Because of noise, numerical errors and inaccurate correspondences,

A is more likely to be full rank, and the solution is the column of V

associated with the least singular value of A. Because the

estimated fundamental matrix is almost certainly nonsingular, the singularity

constraint can be enforced by adjusting the entries of the estimated matrix

Fest as follows: compute the SVD of the estimated matrix,

Fest = UDVT;

set the smallest singular value on the diagonal of the matrix D equal to

zero; and compute the corrected estimate

F′ = UD′VT,

where D′ is the corrected matrix D.

In order to avoid numerical instabilities, the coordinates of

the corresponding points should be normalised so that the entries

of A are of comparable size. Typical pixel coordinates

can make A seriously ill-conditioned. A simple procedure to

avoid numerical instability is to translate the two coordinates of

each point to the centroid of each data set, and scale the norm of

each point so that the average norm over the data set is 1. This can be

accomplished by multiplying each left (right) point in homogeneous

coordinates by two suitable 3×3 matrices, Hl and

Hr. The eight-point algorithm is then used to estimate

the matrix F′ = HrFHl,

and F is obtained as

Hr−1F′Hl−1.

The normalising matrices Hl and Hr are

determined as follows: given a set of points in homogeneous coordinates

pi =

[xi,yi,1]T,

the desired normalising 3×3 matrix H produces the points

pi′ = Hpi such

that pi′ = [(xi-mx)/d,

yi-my)/d,1]T,

where  ,

,  ,

and

,

and  . In this case the average length of

each component of pi′ equals 1.

. In this case the average length of

each component of pi′ equals 1.

The matrices Hl and Hr depend on the

corresponding data centroids, ml = [ml,x,

ml,y, 1]T and

mr = [mr,x,

mr,y, 1]T, and

average lengths, dl and dr, as follows:

The eight-point algorithm for the fundamental matrix F

| Input: n pixel-to-pixel correspondences

{(pl,i =

[xl,i,yl,i,1]T,

pr,i =

[xr,i,yr,i,1]T

):

i = 1,2,...,n}; n≥8

|

| Data normalisation: for

i = 1,2,...,n do {

p′l,i = Hlpl,i;

p′r,i = Hrpr,i }

|

| SVD

A = UDVT

of the n×9 matrix A for the

(overdetermined) system of linear equations Af = 0:

|

|

| The entries of F′ (up to

an unknown, signed scale factor) are the components of the column of V

|

|

corresponding to the least singular value of A.

|

| SVD F′ = UDVT of

F′ in order to enforce the singularity constraint:

|

| Set the smallest singular value

in the diagonal of D equal to 0 to obtain the corrected matrix D′

|

| and

compute the corrected estimate

F" = UD′VT of

the fundamental matrix.

|

| Renormalisation: The output estimate F =

Hr−1F"Hl−1

|

| Outpit: The fundamental matrix, F.

|

Return to the local table of contents

Return to the global table of contents

Since the left epipole el lies on all the epipolar

lines of the left image, the relationship

prTF

el holds for every pr.

But F is not identically zero, so that this is possible if and only if

Fel = 0. Because F has rank 2, it follows

that the epipole, el, is the null space of

F (the null space of a matrix A is the set of all solutions

s to the matrix-vector equation As = 0;

see Wikipedia or

notes of Dr. John Mitchell

from Rensselaer Polytechnic Institute, Troy, NY, USA). Similarly,

er is the null space of

FT.

Accurate localisation of the epipoles is helpful for refining the

location of corresponding epipolar lines, checking and simplifying the stereo

geometry, and recovering 3D structure in the case of uncalibrated stereo.

To determine the location of the epipoles, it is sufficient to find null

spaces of F and FT

using, e.g., the SVD F = UDVT

and FT =

VDUT (notice that there is exactly one

singular value equal to 0 because the eight-point algorithm enforces the

singularity constraint explicitly).

The algorithm for locating the epipoles

| Input: the fundamental matrix F.

|

| SVD F = UDVT

|

| The epipole el is the column of V

corresponding to the null singular value.

|

| The epipole er is the column of U

corresponding to the null singular value.

|

| Output: The epipoles, el

and er.

|

Return to the local table of contents

Return to the global table of contents

Given a pair of stereo images, rectification is defined as a transformation,

or warping of each image such that pairs of conjugate epipolar lines

become collinear and parallel to one of the image axes, usually the

horizontal one. An example below shows an initial and the rectified stereo pair:

|

|

| A stereo pair of images of the same scene

|

|

|

| The rectified stereo pair

|

The rectification reduces generally 2D search for correspondence to a 1D

search on scanlines having the same y-coordinate in both the images.

The image transformation that makes conjugated epipolar lines for a given

stereo pair of images collinear and

parallel to the horizontal image axis can be computed using the known intrinsic

parameters of each camera and the extrinsic parameters of the stereo system.

The rectified images can be thought of as acquired by a new stereo rig, obtained by

rotating the original cameras around their optical centres.

Without losing generality, one can assume that in both cameras (i) the origin

of the image reference frame is the principal point (i.e. the trace of the optical axis),

and (ii) the focal length is equal to f. The rectification algorithm

consists in four steps:

- Rotate the left camera so that the epipole goes to infinity along the

horizontal axis (i.e. the left image plane becomes parallel to the baseline

of the system).

- Apply the same rotation to the right camera to recover the original geometry.

- Rotate the right camera by R.

- Adjust the scale in both camera reference frames.

The rotation matrix Rrect for the first step is built as

follows:

using a triple of mutually orthogonal unit vectors e1,

e2, and e2. Since the problem is

underconstrained, the choice is in part arbitrary. The first vector, e1,

is given by the epipole; since the image centre is in the origin, e1

coincides with the direction of translation T. The only constraint on the second

vector, e2, is that it must be orthogonal to e1.

To this purpose, the cross-product of e1 with the direction vector

of the optical axis (coinciding with the Z-axis) is computed and normalised

to get e2. The third unit vector is unambiguously determined as

e3 = e1 × e21.

The remaining steps are straightforward so that the rectification algorithm

is as follows:

The rectification algorithm

Input: the intrinsic and extrinsic parameters of a

stereo system;

a set of points in each camera to be rectified (which

could be the whole images).

|

| Build the above rotation matrix Rrect.

|

| Set Rl = Rrect and

Rr = RRrect.

|

for each left-camera point,

pl = [x, y, f]T,

compute the coordinates of the corresponding

rectified point, pl′, as

. .

|

| Repeat the previous step for the right camera using

Rr and pr.

|

Output: the pair of transformations to be applied to the two cameras

in order to rectify

the two input point sets, as well as the rectified sets of

points.

|

To obtain integer coordinates to rectify the whole image, the rectification

algorithm should be implemented backwards, i.e. starting from the new image

plane and applying the inverse transformations. The pixel values in the new

image plane are computed by bilinear interpolation of the pixel values in

the old image plane. The rectified image is not in general contained

in the same region of the image plane as the original image. To keep all

the points within images of the same size as the original, one may have to

alter the focal lengths of the rectified cameras.

Return to the local table of contents

Return to the global table of contents

Given the epipolar geometry and pixel correspondences, what the 3-D

reconstruction of a scene observed can be obtained depends on the

amount of a priori knowledge available on the parameters of

the stereo system. There are the following three basic cases:

- Both intrinsic and extrinsic parameters are known - then the

reconstruction problem is unambiguously solved by triangulation.

- Only the intrinsic parameters are known - then the reconstruction

problem is solved and, simultaneously, the extrinsic parameters are

estimated, but only up to an unknown scaling factor.

- Neither the intrinsic, nor extrinsic parameters are known - then

a reconstruction of the environment is still obtained, but up to an unknown,

global projective transformation.

Reconstruction by triangulation is straightforward: given the known

intrinsic and extrinsic parameters, compute the absolute 3-D coordinates of the

points from their projections, pl and

pr. The point P projected into the pair

of corresponding points pl and pr,

lies at the intersection of the two rays from Ol through

pl and Or through pr

respectively. By assumption, the rays are known and the intersection can be

computed. But since parameters and image locations are known only

approximately, the two rays may not actually intersect in space. Their

intersection is only estimated as the point of minimum distance from

both rays.

Let apl, where a is a real

number, be the ray through Ol (a=0) and pl

(A=1) in the left reference frame. Let

T+bRTpr,

where b is a real number, be the ray through Or (b=0) and

pr (b=1) expressed in the left reference frame. The

vector w =

pl×RT is

orthogonal to both the rays. Let

apl + cw, where c is a real

number, be the line through apl (for some fixed a)

and parallel to w. The endpoints,

a0pl and

T+b0RTpr,

of the segment belonging to the latter line

that joins the rays are determined by solving the linear system of equations,

apl -

b0RTpr

+c(pl ×

RTpr) = T,

for the unknown a0, b0, and c0.

The determinant of the coefficients of this system of equations is not equal to zero

and the system has a unique solution if and only if the two rays are not parallel. This

reconstruction can be perfoirmed from rectified images directly, i.e. without going

back to the coordinate frames of the original pair. In this case, one uses the

simultaneous projection equations associated to a 3-D point in the two cameras.

If the geometry of a stereo system does not change with time, the intrinsic and

extrinsic parameters of each camera can be estimated by calibration.

Let Tl, Rl, and

Tr, Rr, be the extrinsic parameters

of the two cameras in the world reference frame. Then the extrinsic parameters

of the stereo system, T and R, are:

R = RlRrT and

T = Tl -

RTTr. It is

easily shown by taking into account that for a point P in the world

reference frame the following relationships hold: Pr =

RrP + Tr and Pl =

RlP + Tl. The relation between

Pr and Pl is given by

Pr = R(Pl - T).

In accord with the former two relationships,

P = RlT

(Pl - Tl) and

Pr =

Rr(RlT

(Pl - Tl) - Tr),

so that Pr =

RrRlT

(Pl -

(Tl -

Rl

RrTTr))

(notice that the rotation matrices are orthogonal, so that their inversion is

equivalent to the transposition).

Reconstruction up to a scale factor involves the essential

matrix, so that by assumption at least n point correspondences,

n>≥8, are established, the intrinsic parameters are known,

and the location of the 3-D points are computed from their projections,

pl and pr. Since the

baseline of the stereo system is unknown, the true scale of the viewed

scene cannot be recovered, and the reconstruction is unique only up to an unknown

scaling factor. This factor can be determined if the distance between two

points in the observed scene is known.

The first reconstruction step requires estimation of the essential matrix,

E, which can only be known up to an arbitrary scaling factor.

From the definition of the essential matrix, it follows that

If the entries of E are divided by the square root of the halved

trace of ETE, it is equivalent

to normalising the length of the translation vector to unit:

Here "hat" indicates the normalised essential matrix and the normalised translation

vector T/||T||. The components of the normalised translation vector are

easily recovered from any row or column of the matrix

.

Then the rotation matrix

is obtained from the normalised essential matrix and translation

vector using simple algebraic computations.

.

Then the rotation matrix

is obtained from the normalised essential matrix and translation

vector using simple algebraic computations.

Reconstruction up to a projective transformation is performed

in the absence of any information on the intrinsic and extrinsic parameters.

It is assumed that only n point correspondences; n≥8, and

therefore the location of the epipoles are given in order to compute the

location of the 3-D points from their projections, pl

and pr.

Return to the local table of contents

Return to the global table of contents

Computational binocular stereo reconstructs 3-D coordinates

of visible points from 2-D coordinates of the corresponding image points.

Image difference, or disparity,

in a stereo pair is the main carrier of 3-D information. Positional differences of

the images of individual 3-D points in the left and right stereo images are measured

by point-to-point or region-to-region matching. Due to different views of stereo

cameras, there are horizontal and vertical disparities of the corresponding points

(see e.g. H. A. Mallot: Stereopsis, Handbook of Computer Vision and Applications,

vol.2, Academic Press, 1999, pp. 485 - 505).

Horizontal, or x-disparity is the difference between the

horizontal coordinates of the corresponding points, whereas vertical,

or y-disparity is the like difference between the vertical

coordinates. The baseline of a stereo system is usually horizontal, so that

vertical disparities are small. With a canonical geometry of a stereo pair,

e.g. with an epopolar stereopair, the vertical disparity is absent.

A disparity map is a continuous 1-1 vector-valued function

d(x,y) = [dx,y, δx,y]

that maps the coordinates (x,y)

of binocularly visible points in one image to the coordinates

(x + dx,y, y + δx,y)

of the corresponding points in the other image of a stereo pair.

Its components are the horizontal, d, and vertical, δ,

disparities, respectively. In other words, the

disparity map relates the corresponding signals in both the images:

g1: x,y ↔ g2: x −

dx,y, y − δx,y.

The mapping is undefined for image regions with no

stereo correspondence, e.g. for partial occlusions due to depth discontinuities.

Search region

depends on the expected range of x- and, if exist, y-disparities. Let

[dmin, dmax]

and [δmin, δmax] be the range of x-

and y-disparities, respectively. Let (x, y) denote a pixel

position in the left image, g1. Then the search

region in the right image, g2, is {(x′,y′):

x − dmax ≤ x′ ≤

x − dmin;

y − δmax ≤ y′ ≤

y − δmin}.

Each binocularly visible 3-D area is represented by (visually) similar

corresponding regions in the images of a stereo pair, and stereo correspondences are

searched for by measuring similarity or dissimilarity between the image regions.

Human vision find these similarities easily and at least reliably, and this

easiness hides the principal ill-posedness of the binocular stereo,

namely, that there always exist a multiplicity of optical 3-D surfaces giving

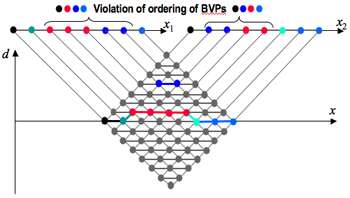

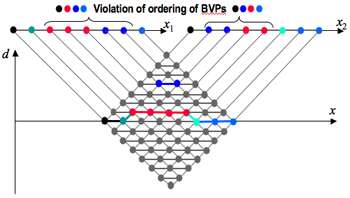

just the same stereo pair. Figure 1 below exemplifies a continuous digital epipolar profile

through the nodes in an epipolar (X,Z)-plane that represent possible correspondences

between the points in digital images of a stereo pair with integer x-coordinates:

Figure 1: Digital profile in an epipolar plane.

These nodes correspond to the admissible x-disparities and

zero y-disparity under the cyclopean (symmetric) X

and Y coordinates in the 3-D space; the cyclopean coordinate frame

has the origin in the midpoint of the baseline.

The blue, green, and black strokes indicate the binocularly visible parts of the profile,

the parts being visible only monocularly in the image 1, and the parts being

visible only monocularly in the image 2, respectively. For compactness, these

profiles are represented below on the cyclopean (x,d)-plane to show a few

distinct profile variants producing just the same stereo images:

Figure 2: Epipolar profiles

in (x,d) coordinates giving the same image signals.

Because the stereo problem is ill-posed, it is impossible to reconstruct

precisely the real 3-D scene from a single stereo pair. Stereo matching pursues a

more limited and thus more practical goal of bringing the reconstructed

surfaces close enough to those perceived visually or restored by

conventional photogrammetric techniques from the same stereo pair. Due to

a multiplicity of visually observed 3-D scenes, there is only a very

general prior knowledge about the optical 3-D surfaces to be found, e.g.

specifications of allowable smoothness, curvature, discontinuities, and so forth.

Return to the local table of contents

Return to the global table of contents

Under symmetric epipolar geometry, a disparity map represents a set of

epipolar profiles, points of each profile having the same y-coordinate.

To reconstruct a pro�file, only the signals along the conjugate scan-lines (with

the same y-coordinate in both images of a canonical stereo pair) have to be matched.

Both the scan-lines represent the intersection of the

image plane by an epipolar plane. The latter contains the stereo base-line which is

parallel to the image plane.

Let pixels along the conjugate scan-lines

in the images have integer x-coordinates and let the scan-lines themselves have

integer y-coordinates with unit steps. The disparity map contains in this case

only the x-disparities of the corresponding pixels. Figure 1 above shows a cross-section

of a digital surface by an epipolar plane. Lines o1x1

and o2x2 represent the corresponding

x-lines in the images. The correspondence between the signals in the profi�le points

and in the image pixels is given by the symbolic labels from "a"

to "k" Notice that all the profi�les in Figure 2 above give just the same

labels in the images provided that the signals for these profi�les have the shown

labels. Let [X,y,Z]T be a vector of symmetric 3-D

coordinates of a binocularly visible point.

Here, y = y1 = y2 denotes the y-coordinate

of the epipolar lines that indicates an epipolar plane containing the point and

[X,Z]T are Cartesian 2-D coordinates of

the point in this plane. The spatial

X-axis coincides with the stereo baseline linking the optical centres O1

and O2 of the cameras and is the same for all the planes. The Z-axis

is orthogonal to X-axis in the epipolar plane with the index y. The origin O

of the symmetric

(X,Z)-coordinates is

midway between the optical centres (rather than coincides with the centre O1 as

in the conventional asymmetric canonical geometry). If spatial positions of the origin O

and of the epipolar plane with the index y are known, the symmetric coordinates

[X,y,Z]T are easily converted into any given

Cartesian 3-D coordinate system.

Let pixels [x1,y]T

and [x2,y]T in the images

correspond to a 3-D point [X,y,Z]T. Then the

x-disparity, or x-parallax d = x1 − x2

is inversely proportional to the depth (distance,or height) Z of the point

[X,y,Z]T from the baseline OX:

d = bf/Z where b denotes the baseline length and f is the focal

length of the cameras.

Each digital profi�le in the epipolar plane y is a chain of the isolated 3-D points

[X,y,Z]T that correspond to the pixels

[x1,y]T

and [x2,y]T. If the 3-D points

[X,y,Z]T that relate to all the possible stereo

correspondences are projected onto the image plane through the "cyclopean" coordinate

origin O, they form an auxiliary "cyclopean" image which lattice has x-steps

of 0.5 and y-steps of 1. Symmetric stereo mappings in Figure 2 are obtained by replacing

the coordinates X and Z of digital profiles in the epipolar plane y with

the corresponding (x)-coordinate and x-disparity in the cyclopean lattice, respectively.

If the pixels [x1,y]T

and [x1,y]T

in a stereo pair correspond to a 3-D point with the planar cyclopean coordinates

[x,y]T, then the following simple relationships hold:

x1 = x + d/2; x2 = x − d/2

⇔ x = (x1 + x2)/2;

d = x1 − x2.

Figure 2 shows the epipolar plane of Figure 1 in the symmetric (x,d)-coordinates.

Return to the local table of contents

Return to the global table of contents

Stereo matching is governed by general constraints reflecting

intrinsic properties of stereo viewing and 3-D scenes observed:

- Epipolar constraint. Given the canonical geometry of stereo images in terms of

the conjugate pairs of epipolar scan-lines,

the search for stereo correspondences is reduced to an 1-D search along the lines (as

the matching points lie along them).

Typically, an arbitrary stereo pair of a 3D scene is rectified, i.e. transformed

to a canonical stereo geometry, by recovering the epipolar geometry so that the conjugate

epipolar lines form parallel scanlines in the rectified images.

This constraint considerably reduces the search region and thus the

computational complexity of stereo matching and excludes false matches between points

on different epipolar lines.

- Uniqueness constraint. Every 3-D point to be reconstructed is observed either

binocularly, or only monocularly so that every pixel in one stereo image has

either exactly one corresponding pixel in the other image, or no correspondence at all

(in the latter case it depicts a partial occlusion). This constraint may not

hold if the scene contains transparent or semi-transparent surfaces.

- Ordering constraint. If an epipolar profile is continuous, then its points

and the corresponding points in the conjugate epipolar lines in both stereo images

lie in the same order (see Figures 1 and 2). This constraint does not hold for 3-D scenes with multiple

disjoint surfaces:

- Disparity range. A range of depth values, i.e. a range of possible

disparities, dmin

≤ d ≤ dmax, is typically known for every 3-D scene

depicted in a stereo pair. This constraint reduces the

search region and excludes false matches between points outside the range.

- Continuity constraint. Observed surfaces are assumed to be smooth in most cases, i.e.

small local changes of disparities over the scene except for object boundaries.

To simplify stereo matching, some additional assumptions are sometimes added to the

above-mentioned restrictions, such as

- Equal corresponding signals. Under the assumption that observed 3-D surfaces have

Lambertian reflection (i.e. transmit outcoming light equally in all directions),

image signals (grey levels or colours) in corresponding points are exactly the same.

This constraint dominates in the current computer vision works on stereo reconstruction

to justify simple matching scores such as absolute or squared signal differences.

But it does not hold in most practical cases because natural surfaces are rarely

Lambertian.

- Frontal parallel surfaces. Area-based correlation matching implicitly or explicitly

assumes that 3-D surfaces observed by a stereo system with canonical geometry are frontal and

parallel to the image plane, i.e. disparities are constant for image regions depicting

each such surface. This assumption obviously does not hold for the surfaces with slopes and creases.

- Similarity of features. Feature-based matching assumes that corresponding

features such as edges, corners, contrast local areas, and so forth, and groups of such

features are similar and mutually consistent

in both images to within limited geometric deviations due to stereo viewing.

Return to the local table of contents

Return to the global table of contents

Eigenvalues and Eigenvectors

Eigenvectors and eigenvalues of a matrix A are solutions of the matrix-vector equation

Ae = λe, or (A−λI)e = 0 where I

is the n×n identity matrix with the components Iij = 1

if i = j and 0 otherwise. The latter equation suggests that the

determinant of the matrix A−λI is equal to zero so that the

eigenvalues are the solutions of the corresponding algebraic equation |A−λI| = 0.

If A is real-valued and symmetric n×n matrix,

Aij = Aji, it has N linearly independent

(or mutually orthogonal) eigenvectors e1, e2, …, en.

Typically, the eigenvectors are normalised

so that their dot product ei•ej = 1 if i=j

and 0 otherwise. Such eigenvectors are called orthonormal.

Each eigenvector en has its own eigenvalue λn such that

Aei = λiei. For example, let A be an arbitrary

2×2 symmetric real matrix with the components a, b, c:

.

.

Then its eigenvalues are the two solutions of the above quadratic equation:

.

.

For example, if a = c = 1 and b = 0 (the 2×2 identity matrix),

then both the eigenvalues are equal to 1:

.

.

Actually, in this case the matrix equations Ae = λe themselves do

not constrain the eigenvectors (they give only the identities

ej,i = ej,i for i,j = 1, 2), so that

only the constraints due to their orthonormality hold.

In this case there exist infinitely many possible pairs of eigenvectors of the following form:

e1 = [cos θ sin θ]T and

e2 = [−sin θ cos θ]T

where θ is an arbitrary angle, e.g. θ = 0 for the above pair

e1 = [1 0]T and

e2 = [0 1]T.

If a = c = 0 and b = 1, then λ1 = 1 and

λ1 = −1. The corresponding eigenvectors are obtained as follows:

.

.

Two more examples of computing eigenvalues and eigenvectors for particular

3×3 symmetric real matrices:

,

,

and

.

.

Additional information about eigenvalues and eigenvectors can be found in:

- Eigenvalue by

Eric W. Weisstein (from MathWorld - A Wolfram Web Resource; Wolfram Research)

- Eigenvector by

Eric W. Weisstein (from MathWorld - A Wolfram Web Resource; Wolfram Research)

-

Eigenvalues and Eigenvectors of a Matrix by Dr. T. Lepikult (Tallin Technical University, Estonia)

- PCA

Principal Component Analysiis and Dataset Transformation by R. B. Fisher (UK)

Eigendecomposition

Matrix diagonalisation (called also eigendecomposition) is defined for a square

n×n matrix A with N orthogonal eigenvectors such as

a symmetric real one.

Let E =[e1 e2 … en]

be the n×n matrix with the orthonormal eigenvectors of A as the columns, i.e.

Eij = ej,i.

Then AE = [λ1e1

λ2e1 … λnen]

is the matrix having as the columns the eigenvectors factored by their eigenvalues, i.e. the

matrix with the components

(AE)ij = λjej,i;

i,j = 1, …, N, where ej,i is the i-th

component of the j-th eigenvector.

In the transpose,

ET, the same eigenvectors

form the rows. Therefore, ETE =

I, so that the matrix E is orthogonal, that is,

its transpose, ET,

is the (left) inverse, E−1.

Hence ETAE = Λ where Λ

is the n×n diagonal matrix

Λ = diag{λ1, λ2,

…, λN}

with the components &Lambdaij = λi if i=j

and 0 otherwise.

Because every real symmetric matrix A is diagonalised by the transformation

ETAE = Λ, it is decomposed

into a product of the orthogonal and diagonal matrices

as follows: A = EΛET.

In particular,

.

.

Additional information about eigendecomposition can be found in:

- Eigen Decomposition by

Eric W. Weisstein (from MathWorld - A Wolfram Web Resource; Wolfram Research)

SVD is defined for a generic, rectangular matrix as follows: Any m×n

matrix A can be written as the product of three matrices:

A = UDVT. The columns of

the m×m matrix U are mutually orthogonal unit vectors,

as the columns of the n×n matrix V. The m×n

matrix D is diagonal; its diagonal elements, σi,

called singular values are such that σ1≥σ2≥

… ≥σN≥0. The matrices U and V are not unique,

but the singular values are fully determined by the matrix A.

The SVD has the following properties:

- A square matrix A is nonsingular if and only if all its singular values

are different from zero; the values σi show also how close

the matrix is to be singular: the ratio C=σ1/σn,

called condition number, measures the degree of singularity of A.

If 1/C is comparable with the arithmetic precision of a computer, the matrix

A is ill-conditioned and for all practical purposes should be

considered singular.

- If A is a rectangular matrix, the number of non-zero singular values

σi equals the rank of A. Given a fixed tolerance,

ε, being typically of order 10−6, the number of

singular values greater than ε equals the effective rank of A.

- If A is a square, nonsingular matrix, its inverse can be written as

A−1 =

VD−1UT.

Be A singular or not, the pseudoinverse of A,

A+, is A+ =

VD0−1UT,

with D0−1 equal to D−1 for

all nonzero singular values and zero otherwise. If A is nonsingular,

then D0−1 = D−1

and A+ = A−1.

- The columns of U corresponding to the nonzero singular

values span the range of A; the columns of V corresponding to

the zero singular value span the null space of A (see e.g. reference

materials on null space in Wikipedia or

lecture notes

on matrices prepared by Dr. T. Lepikult, Tallin Technical University, Estonia).

- The columns of U are eigenvectors of

AAT. The columns of V are

eigenvectors of ATA.

The squares of the nonzero singular values are the nonzero eigenvalues of both

the n×n matrix ATA

and m×m matrix AAT.

Moreover, Avi =

σiui and

ATui =

σivi, where

ui and vi are the

columns of U and V corresponding to σi.

- The Frobenius norm of a matrix A is the sum of the

squares of the entries aij of A, or

. It follows from the SVD

A = UDVT that

. It follows from the SVD

A = UDVT that

.

.

An example of the SVD of the symmetric singular square 3×3 matrix being used

earlier in the eigendecomposition is as follows:

.

.

The next example presents the SVD of a simple 3×2 rectangular matrix:

.

.

Note that there is another definition of SVD with the m×n matrix U

and n×n matrices D and V which is typically used

in computations (see e.g. W. H. Press, B. P. Flannery, S. A. Teukolsky, and W. T. Vetterling,

Numerical Recipes in FORTRAN: The Art of Scientific Computing, Cambridge University Press,

UK, 2nd ed., 1992: Section 2.6 "Singular Value Decomposition", pp. 51-63) because of

a smaller memory space for the matrices: mn + 2N2 rather that

m2 + mn + N2 for the initial definition

as typically N << m. The latter SVD definition is

used e.g. in the

C-subroutine svd() being

a very straighforward translation into C of the Fortran program developed in the Argonne

National Laboratory, USA, in 1983.

Additional information about SVD can be found in:

Least squares and pseudoinverse

Let an over-determined system of m linear equations,

Ax = b, have

to be solved for an unknown N-dimensional vector x. The m×n

matrix A contains the coefficients of the equations, and the m-dimensional

vector b contains the data. If not all the components of b are null,

the optimal in the least square sense and the shortest-length

solution x∗ of the

system is given by

x∗ = (ATA)+

ATb. The derivation is as follows:

.

.

If the inverse of (ATA) exists,

then the matrix A+ =

(ATA)−1

AT is the pseudoinverse, or

Moore-Penrose inverse.

If there are more equations than unknowns, the pseudoinverse is more likely to

coinside with the inverse of ATA,

but it is better to compute the pseudoinverse of

ATA through SVD to account for

the condition number of this matrix.

While only a square full-rank matrix A has the conventional inverse

A−1, the pseudoinverse A+ exists for

any m × n matrix; m > n. Generally, SVD (singular value decomposition)

is the most effective way of getting the pseudoinverse: if

A = UDVT where

the m×m matrix U and n×n matrix V are

orthogonal and the m×n matrix D is diagonal with real, non-negative

singular values σ1≥σ2≥ …

≥σN≥0, then

A+ =

V(DTD)−1

DTUT.

This and additional information about the pseudoinverse matrix can be found in:

Homogeneous Systems

Let one have to solve a homogeneous system of m linear equations in

N unknowns, Ax = 0 with m≥n−1 and

rank(A)=N−1. A nontrivial solution unique up to a

scale factor is easily found through SVD: this solution is proportional to

the eigenvector corresponding to the only zero eigenvalue of

ATA (all other

eigenvalues being strictly positive because the rank of A is equal

to N−1). This is proved as follows. Since the solution may have an

arbitrary norm, a solution of unit norm in the least square sense has to

minimise the squared norm ||Ax||2 =

(Ax)TAx =

xTAT

Ax, subject to the constraint

xTx = 1. This is

equivalent to minimise the Lagrangian L(x) =

xTAT

Ax − λ(xTx

− 1). Equating to zero the derivative of the Lagrangian with respect to x

gives ATAx −

λx = 0, so that λ is an eigenvalue of the matrix

ATA, and the solution,

x = eλ, is the corresponding eigenvector. The

solution makes the Lagrangian L(eλ)

= λ; therefore, the minimum is reached at λ = 0, the least

eigenvalue of ATA.

According to the properties of SVD, this solution could have been equivalently

established as the column of V corresponding to the only null singular value of

A (the kernel of A). This is why one need not distinguish between

these two seemingly different solutions of the same problem.

Matrix Estimates with Constraints

Let entries of a matrix, A, to be estimated satisfy some algebraic

constraints (e.g., A is an orthogonal or the fundamental matrix). Due

to errors introduced by noise and numerical computations, the estimated matrix,

say Aest, may not satisfy the given constraints. This may cause

serious problems if subsequent algorithms assume that Aest

satisfies exactly the constraints.

SVD allows us to find the closest matrix to Aest, in the

sense of the Frobenius norm, which satisfies the constraints exactly. The SVD

of the estimated matrix,

Aest = UDVT,

is computed, and the diagonal matrix D is replaced with

D′ obtained by changing the singular values of D to

those expected when the constraints are satisfied exactly (if Aest

is a good numerical estimate, its singular values should not be too far from

the expected ones). Then, the entries of the new estimated matrix,

A = UD′VT

satisfy the desired constraints by construction.

Return to the local table of contents

Return to the global table of contents

.

.

where

where

are points in homogeneous pixel coordinates.

Therefore,

are points in homogeneous pixel coordinates.

Therefore,

, and the above equation

, and the above equation

with the essential matrix E

yields

with the essential matrix E

yields  .

The matrix F is called fundamental matrix. Just as the

essential matrix, the fundamental matrix has rank 2 (since E has rank 2 and

Ml and Mr have full rank). The essential matrix

encodes only information on the extrinsic parameters of the stereo system, whereas

the fundamental matrix encodes information on both the extrinsic and intrinsic

parameters. Both matrices enable full reconstruction of the epipolar geometry.

.

The matrix F is called fundamental matrix. Just as the

essential matrix, the fundamental matrix has rank 2 (since E has rank 2 and

Ml and Mr have full rank). The essential matrix

encodes only information on the extrinsic parameters of the stereo system, whereas

the fundamental matrix encodes information on both the extrinsic and intrinsic

parameters. Both matrices enable full reconstruction of the epipolar geometry.

for the nine unknown entries

of F. These equations form a homogeneous linear system, and if the

n points do not form degenerate configurations, the nine entries of

F are determined as the nontrivial solution of the system. Since

the system is homogeneous, the solution is unique up to a signed scaling

factor. Typically, n > 8, so that the system is overdetermined and

its solution is obtained using singular value decomposition (SVD) related

techniques

(see

for the nine unknown entries

of F. These equations form a homogeneous linear system, and if the

n points do not form degenerate configurations, the nine entries of

F are determined as the nontrivial solution of the system. Since

the system is homogeneous, the solution is unique up to a signed scaling

factor. Typically, n > 8, so that the system is overdetermined and

its solution is obtained using singular value decomposition (SVD) related

techniques

(see  ,

,  ,

and

,

and  . In this case the average length of

each component of pi′ equals 1.

. In this case the average length of

each component of pi′ equals 1.

.

.

.

Then the rotation matrix

is obtained from the normalised essential matrix and translation

vector using simple algebraic computations.

.

Then the rotation matrix

is obtained from the normalised essential matrix and translation

vector using simple algebraic computations.

.

. .

.  .

.  .

.  ,

,  .

.

.

.

. It follows from the SVD

A = UDVT that

. It follows from the SVD

A = UDVT that

.

.

.

.  .

.

.

.